Written in Chinese, translated by Claude Sonnet 4.5.

I recently came across several papers that all touch on a topic I’m very interested in:

What new things can current AI intellectually teach humanity?

Humanity’s Last Exam (HLE)

It’s old news that general-purpose large language models (LLMs) surpass any “individual human” in general intellectual capacity. There’s a dataset called “Humanity’s Last Exam” (HLE)1, which is one of the hottest benchmarks for testing LLM capabilities today.

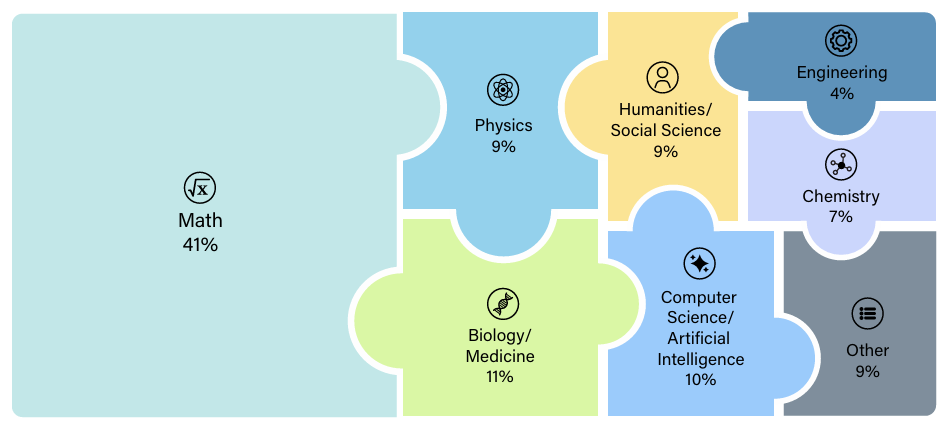

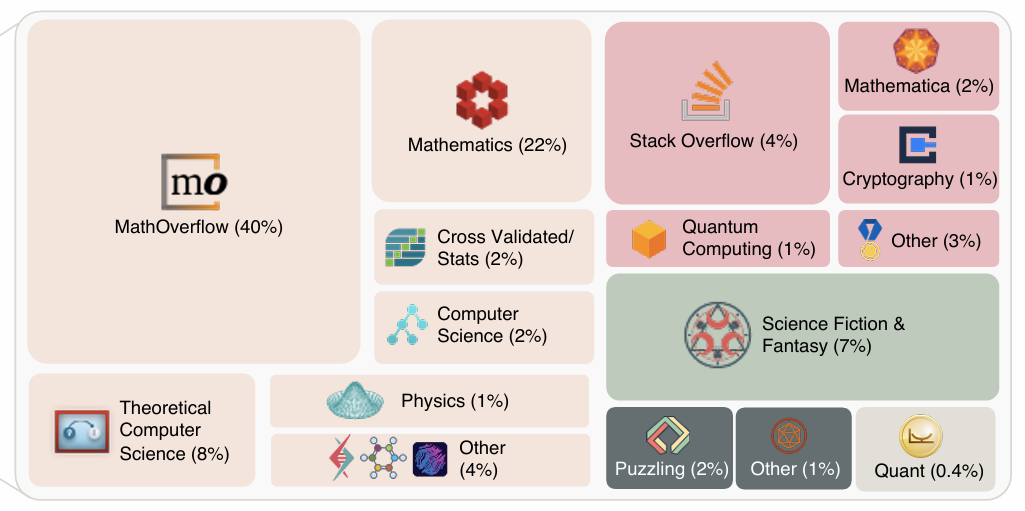

This exam was designed by nearly 1,000 experts from various fields. From an initial pool of over 70,000 questions, through layers of automatic filtering and rigorous manual review, 2,500 questions were ultimately selected. Of these, 24% are multiple-choice questions, and the rest are fill-in-the-blank/problem-solving questions. Mathematics accounts for 41%, biology and medicine 11%, computer science 10%, physics 9%, humanities and social sciences 9%, and so on:

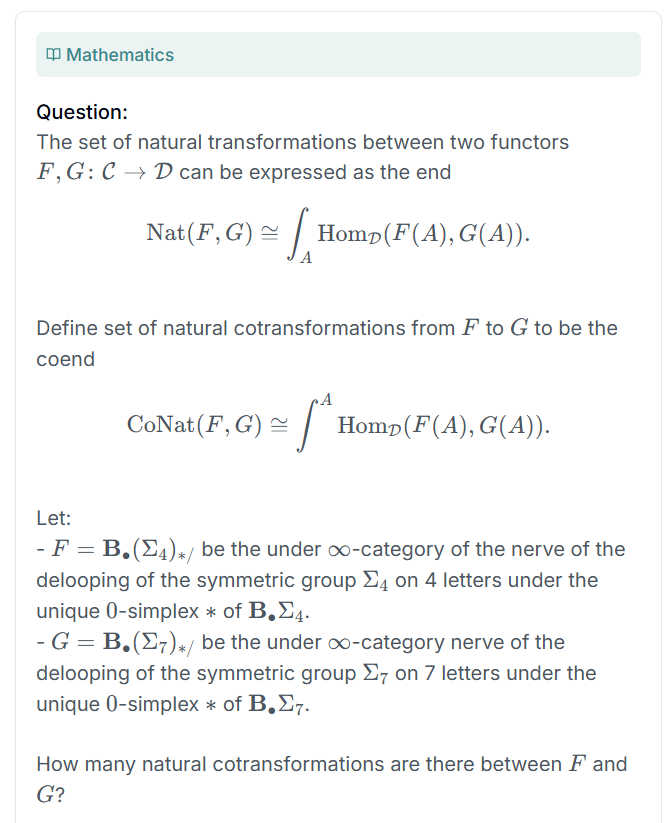

Math questions look like this:

I majored in mathematics as an undergraduate, and I still can’t understand it. Claude says this is an algebraic topology and category theory problem, suitable for doctoral-level coursework. It explained the gist of the question, but the prerequisite knowledge is so vast that I still can’t comprehend it for now.

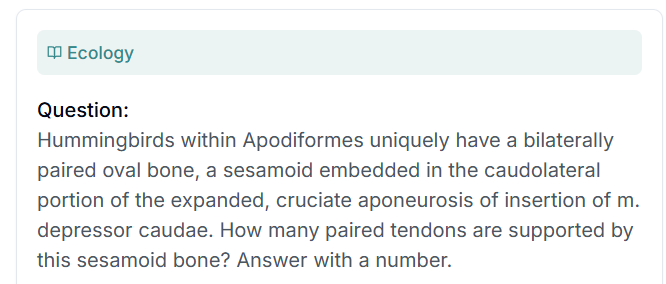

Let’s try one where at least the question itself is understandable. Ecology:

This tests extremely obscure and unsearchable knowledge.

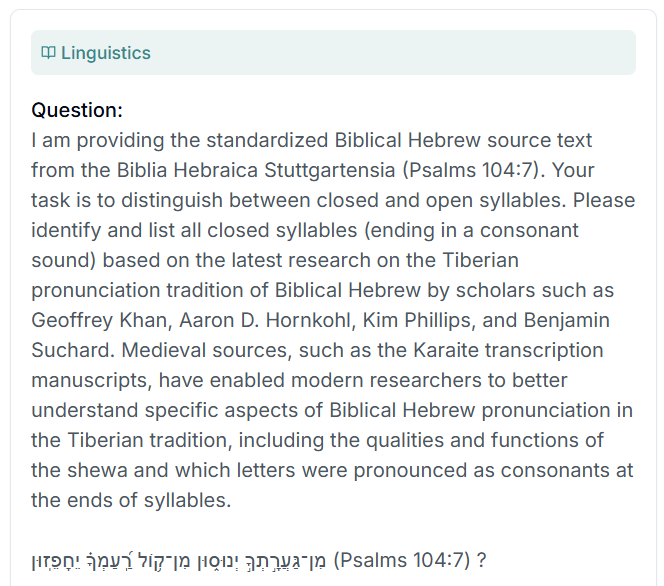

Finally, a linguistics question:

This tests the ability to apply methodologies from existing literature to make inferences on new material.

When “Humanity’s Last Exam” was first published, the most powerful model was still o3-mini (January 2025), with only a 13.4% accuracy rate. Currently, the highest-ranked on the official leaderboard is gpt-5 (August 2025), with a 26% accuracy rate. Unofficially, grok-4-heavy, which uses a multi-agent architecture, claims to have reached 44%.

I haven’t found any data showing how “individual humans” would perform on these 2,500 questions, but subjectively I believe it’s impossible to exceed 44%. Even if you resurrected Hilbert, the last polymath in mathematical history, he would be capped by the 41% of math-related problems.

If you want to know the answers, download the dataset here. They didn’t put the plaintext online to avoid having the text incorporated into LLM training corpora and causing “leaked questions.”

However, at least the answers to these questions are already known to “all of humanity.” Each individual person still has some metaphysical consolation.

Unsolved Questions (UQ)

However, recently there’s a new “Unsolved Questions” dataset (UQ)2. The paper’s authors selected 500 questions from StackOverflow that have never been answered by users. These questions all have high user attention (views) and votes, and have been filtered by LLMs and humans to identify those that are “clearly defined, highly difficult, solvable, and objective.” Among the 500 questions that meet these criteria, math questions still account for the majority.

But there are also many relatively everyday questions like “I read a science fiction novel as a child about XXXX, does anyone know the title?”, “Are there historical documents recording ancient India’s ‘creatures in stone’ (such as turtles and toads)?”, “Let me give you all a riddle,” and so on.

But if we ourselves don’t (yet) know the answer, how can we judge whether an LLM’s response is “correct”?

This itself is a very interesting question. The paper authors’ solution is to have LLMs form a pipeline with clear division of labor for step-by-step review. Because generally speaking, “verifying whether an answer is correct” is much easier than “providing a correct answer.”

I won’t detail the specific review scheme, but roughly it involves having LLMs separately check whether the answer is relevant and complete to the question, whether there are critical flaws in detailed citations and logic, with multiple independent reviews, ultimately requiring unanimous/majority approval. Personally, I think there are many aspects that could be debated and improved. For example, one step in the scheme detects whether there are logical errors in details in the LLM’s answer, and once found, judges the final answer as incorrect. This is generally feasible for math questions, but other answers that don’t require strong logical chains will be mistakenly penalized.

For example, the following example seeking a science fiction book title (original question link):

70s book/short story, Man wakes up from suspend animation or cryo and world has collapsed

In the book, the protagonist wakes up from either suspended animation or cryo (Probably supposed to be a short test), After 10-20 years have passed.

He finds the facility abandoned (and looted?), gets to the surface and it looks like a war zone. Wreckage everywhere, smashed buildings, bullet holes everywhere. (I think he gets some gear from the facility, MREs, Water, First Aid kit, Weapons) and goes exploring to find out what happened.

He comes across a (young kid?) who is terrified of him, (maybe trapped or pinned under debris), gives him food and water and digs him out or helps him so the kid trusts him. Eventually the kid leads him to his family (mother & other siblings) who are equally terrified when they see him, but the kid convinces them the protagonist is OK, that he saved his life.

None of the children are really old enough to remember anything from before the protagonist enter the cryo test, but the mother is. He starts questioning her about what happened while he was asleep. She tells him it was NOT another country invading, it was all internal (society collapsed, food shortages, massive riots).

Later, I think they hear a large group of people approaching the (town? city? area?) where the family has been living, and the family becomes frightened again, saying the enemy is coming back.

He says he will help defend them and asks what the enemy looks like. She looks at him and says “They look just like you.” He is African American, the implication being that African-American uprisings are what caused the riots and collapse of society.

This might have been a short story/novella. It probably was a paperback in English published in the USA.

I may be misremembering, maybe she did not tell him they look like you, he just went and got into a position to defend against >the enemy, and when they approached, realized they were all African-American.

o3-pro’s answer to this question is:

The story you’re remembering is Wilson Tucker’s short novel / long novella “The Year of the Quiet Sun” (first published in 1970; Hugo- and Nebula-award nominee).

How it matches the details you gave

- How the protagonist gets into the future

- Instead of cryogenic sleep, Army Captain Addison “Add” Douglass (an African-American Vietnam-era soldier) is one of a small U.S. government team that tests a brand-new time machine in 1978.

- After a couple of short proving jumps he is sent about twenty years forward, to the year 2000. From Douglass’s point of view it is an almost instantaneous “sleep” and awakening, exactly the way you remembered it.

- Abandoned underground facility / gathering supplies

- The time-gate is in a sub-basement under the University of Chicago. When Douglass materialises in 2000 the building is wrecked and deserted.

- He salvages rations, water, weapons and medical supplies that the project planners had cached for returning travellers.

- A devastated city that looks like a war-zone

- Chicago is in ruins: burned-out cars, bullet-scarred buildings, smashed storefronts. The United States has broken up after years of food shortages, riots and guerrilla fighting.

- Meeting a frightened child, then the child’s family

- Douglass finds a white boy (David) trapped under rubble, frees him, gives him water and food and gains his trust.

- David takes him to his widowed mother, Judith, and her two younger children, who are at first terrified because a Black man has suddenly turned up.

- “It wasn’t a foreign invasion – it was internal”

- Judith explains that what destroyed the country was not an outside enemy but a bitterly escalating series of internal conflicts—racial violence, food riots, martial law—until civil society collapsed.

- “What do the enemy look like?” – “They look just like you.”

- Judith warns Douglass that a band of marauders is coming back. When he asks how he will recognise them, she says, “They look like you.” The attackers are Black militants; Douglass, being Black himself, finally understands why the white family were so afraid of him.

- Length, publications and era

- The story is only about 45 000 words (roughly 150–180 paperback pages) so it often appears in anthologies and “best novella” collections from the 1970s onward.

- First U.S. paperback printing was in 1970 (Ace Books).

Although the mechanism is time travel rather than literal cryogenic sleep, every plot element you recalled—the lone Black protagonist suddenly 20 years out of his own time, the looted underground installation, the ruined city, rescuing a boy, the fearful white family, and the climactic realisation that the “enemy” is people who “look just like you”—comes straight from Wilson Tucker’s “The Year of the Quiet Sun.”

After this answer was posted to the original Q&A site by the paper authors, a human user considered it “incorrect” because point 7 mentions that if calculated as a paperback, the novel should have 150-180 pages, but Wilson Tucker’s “The Year of the Quiet Sun” has 252 pages. This user commented: “If even this is wrong, how can we trust the other parts (of the answer)?”

Finally, the original poster replied, confirming that this book was indeed the one he had read before.

Currently, this question is still considered in the dataset as “no answer has passed correctness verification.” I specifically singled out this example because this kind of “wrong-judging” mentality is very typical and very familiar: seizing on a detail error, then happily rejecting everything wholesale.

Incidentally, including the above example, almost all posts published by the paper authors on StackOverflow were deleted by administrators because the site strictly controls AI-generated content. This is also very fitting for the current times.

Reading these unsolved questions, LLM answers (whether “correct” or not), LLM verification reasoning, and human review opinions is actually quite interesting. Attempts, thoughts, and verification processes exploring the boundaries of intellect are actually publicly available in bulk in text form.

Unlike “Humanity’s Last Exam,” these are questions that individual humans actually want to know the answers to. I recommend everyone check out their official website, especially to look at questions related to your field. Two that impressed me:

- Given the absolute values of adjacency matrix eigenvalues, solve for the signs of eigenvalues. This is in a computational domain I’m relatively familiar with. The solution given by gpt-5 is clever and feasible, and the complexity analysis also hints at the computational concerns of the original question to some extent. If it were me back when I was still in school, I might have been able to quickly try some solutions. Now I’d probably have to spend a lot of time reviewing knowledge and re-sharpening my tools.

- What is the earliest land route between Sweden and Finland. This seemingly searchable knowledge question actually doesn’t have an obvious answer, because the questioner requires that this road be historically documented and built for a “specific purpose” (such as a post road), rather than a commercial route naturally formed by the public. gpt-5’s answer first confirms that Sweden’s earliest royal postal network was established in 1636 and included the coastal road Kustlandsvägen, while the coastal road Pohjanmaan rantatie in Finland, which was then under Swedish jurisdiction, became an official postal road in 1638 after renovation and upgrade. Both extended to meet at the border island of Torneå (now part of Finland, today filled in to become a peninsula). At that time, Torneå relied on ferries in summer, but could use the ice surface in winter (the first bridge was built in 1919), connecting the postal roads on both sides. So gpt-5 believes that this three-in-one road combination can meet the questioner’s requirements. This is an interesting example of constructing abstract things from historical materials that meet definitional clauses.

The idea behind the “Unsolved Questions” dataset is: collect questions that have not yet been answered by humans, screen out the most likely AI answers through an LLM automated verification pipeline, and submit them to human experts or original questioners for final confirmation. Because the paper was published 3 weeks ago, currently only 10 of the 500 questions have been verified by humans as having correct answers, mostly math-related. The authors believe this can both provide a new (approximate) benchmark for LLMs and help human users get the answers they want.

However, no matter how difficult to answer, these questions have at least “been asked,” and the answers can still be described in language.

So, for new knowledge discovered by AI that cannot (yet) be described in language, how should it be disseminated to humans?

AI Teaching Chess Grandmasters

First, this knowledge/skill already exists. AI has long completely defeated humans in closed environments like chess and Go, and its chess skills far exceed the strongest human players. Many professional chess players today are learning AI’s strategies. However, being able to win at chess and being able to clearly explain why you play this way and pass on “knowledge points” or “skills” to another chess player are two different things. People who have played chess know that many excellent moves are guided by “intuition” developed through long-term training. And the language and text attempting to explain these moves afterward appear pale and powerless compared to the complexity contained in the moves themselves.

But early this year, a paper3 used AlphaZero (AZ) to teach four grandmasters who have won world chess championships some new techniques. These four grandmasters are:

- Vladimir Kramnik: World Champion 2000-2007. Historical highest rating rank: 1

- Dommaraju Gukesh: Current World Champion in 2024. Historical highest rating rank: 3

- Hou Yifan: Women’s World Champion 2010-2017. Historical highest rating rank: 55

- Maxime Vachier-Lagrave: Youth World Champion 2009. Historical highest rating rank: 2

AZ is not a language model; it doesn’t speak, it only plays chess. It can judge the quality of board positions, but these judgments are hidden in a large number of black-box numerical values inside the model, difficult for humans to interpret.

This paper is about how to extract chess moves that humans don’t yet know from them.

The rough steps are:

- First find numerical patterns that AZ only commonly exhibits when it has “made a good move” (by its own judgment)

- From these numerical patterns, filter out those that also appear in human chess games

- From these numerical patterns, filter out those that AZ itself also exhibited during early training

- Use the remaining numerical patterns to continue training early-stage AZ, then see if it can imitate the same good moves as the final AZ. Filter out numerical patterns that cannot be learned

After the above 4 steps, the remaining “excellent,” “unknown to humans,” “advanced,” “learnable” numerical patterns are called “concepts” by the paper authors.

Then, use a single concept to reverse-match corresponding multiple historical game states. This means that in that position, AZ once used that “concept” to make a good move. Thus, board states related to the same concept can be seen as practical “examples” describing that concept.

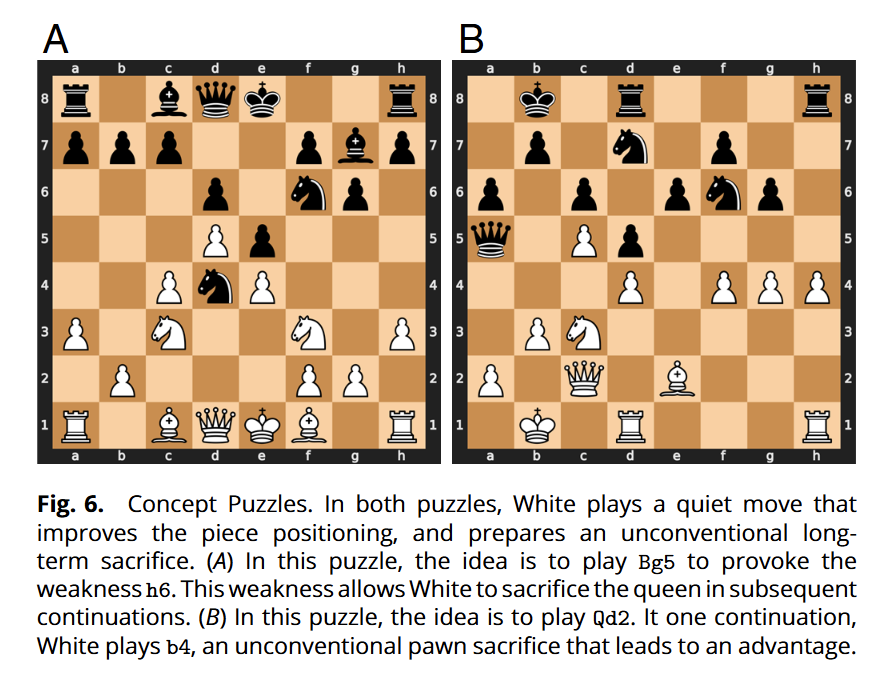

Below shows two different game groups for a certain concept. Friends who know chess can examine them. According to the author’s (the first author is a professional chess player) comments, this belongs to an uncommon sacrifice for positional advantage.

Next, have the chess grandmasters try to make the next move in several game examples related to the same concept, to see if their decisions match AZ’s “good move.” After making the move, tell them what AZ’s “good move” was.

After several rounds like this, did the grandmasters perhaps learn something? The paper authors had the grandmasters play in several new games related to the same concept. The results showed that all four masters’ new moves matched AZ’s “good moves” more than before.

Since AZ’s chess skills far exceed those of the four masters, this experiment proves that its advanced knowledge can “teach” grandmasters, who represent the upper limit of human chess ability, new techniques.

The four grandmasters had their own comments on the experimental process. They believed these games were “very complex—not easy to figure out how to play.” When analyzing AZ’s good moves, they also found them interesting: “good idea, also hard to think of,” “clever,” “very new,” “very useful… doesn’t feel very natural,” “very interesting.” Some even reflected afterward that their moves different from AZ were “too emotional,” and although “technically strong,” would show weaknesses in subsequent steps.

Not all extracted “concepts” were useful. One grandmaster found that AZ’s (playing black) certain strategy was an impractical “active draw”:

“… this is a very clear and important theme to understand. So, g4, the move it proposes, in a practical sense it’s a very big move, because you see, in such situations, the engine already knows the final result. For engine it doesn’t matter which move it plays because it calculated it’s a draw, but g4 is basically forcing it. After g4 Black has no winning chances, but otherwise I have a feeling that after Black plays let’s say Qd2, it’s not … easy practically for White to make the draw. For an engine it’s ok, but practically no one would play it because g4 is basically offering a draw- and with other moves Black is running zero risk, yet has practical chances to win the game if White makes a mistake. An engine doesn’t understand the concept of practical play- while this is a draw, it’s not an easy draw for White. g4 is one of many moves leading to a draw, but in a practical sense the worst one as it gives Black zero chances to win. So that is my understanding, that it’s not the objective best move. Practically definitely a wrong move.”

In other words, AZ is convinced this game should be a draw—if the opponent is also a chess player at AZ’s level (AZ’s training method is to play against itself, so this assumption is reasonable for it). It doesn’t consider the possibility that the opponent might be at a lower level, so it doesn’t play moves that would put more positional pressure on the opponent, but directly forces a draw. But the grandmaster indicates that the extra pressure would make opponents at human-level struggle and possibly make mistakes and lose.

This paper is very interesting. It designs a process for extracting paradigm examples containing “unique techniques” from the massive black-box numerical values representing AI experience. If there are some new, complex concepts that are difficult to describe in plain traditional language, then we can at least precisely construct/search for instances containing these concepts, similar to Zen “koans,” allowing people to “learn by doing.”

AI is already expanding the boundaries of human intellect. This is just the beginning.

Just like the top experts in various fields before, who spent their entire lives, some of whom made unprecedented contributions and became solid stepping stones for future generations. These discoveries, summaries, theories, and innovations have forged every aspect of our modern technological life, safely wrapping ordinary people within. These true experts are far from each individual person’s life—because they also have their own lives—so ordinary people can only access the intellectual foundation they collectively built through textbooks, popular science, media, and other second-hand information.

But now, AI with these intellectual capabilities is in the APP/API at your fingertips, ready for your use at any time. In an instant, people have become intellectually free.

“The best minds of my generation are thinking about how to make people click ads.”

- Jeff Hammerbacher, 2011

The above quote was originally a helpless complaint by a big tech employee about the state of society, but today it has become a deafening practical question:

OK, now you have a choice—so what do you want them to do?

This is a very marvelous crossroads in time. Perhaps also the last wonderful journey of Homo sapiens explorers.

Humanity’s Last Exam: https://lastexam.ai/, 2025. ↩︎

UQ: Assessing Language Models on Unsolved Questions, https://uq.stanford.edu/, 2025 ↩︎

Schut et al, 2025. Bridging the human–AI knowledge gap through conceptdiscovery and transfer in AZ. https://www.pnas.org/doi/epdf/10.1073/pnas.2406675122 ↩︎